AI companion apps like Candy AI have exploded in popularity, promising emotional support, realistic conversations, and even intimate roleplay. But if you’ve ever shared personal thoughts with Candy AI, you might have asked yourself a serious question: Is Candy AI safe to use?

Users report mixed experiences: some praise Candy AI for easing loneliness, while others worry about data privacy, adult content risks, and even the app suddenly disappearing from app stores. In this article, we'll break down everything you need to know: Candy AI's privacy and security, what real users are saying, why the platform faced shutdown rumors, and which alternatives you should consider.

Let’s dive in! 🚀

Table of Contents

- Part 1: Candy AI Privacy and Data Security

- Part 2: Is Candy AI Legit or Shut Down?

- Part 3: Candy AI User Reviews – Positive and Negative

- Part 4: Candy AI and NSFW Content Risks

- Part 5: Best Candy AI Alternatives

- Part 6: Final Verdict – Is Candy AI Safe to Use?

PART 1. Candy AI Privacy and Data Security

The number one concern with any AI companion is privacy. Candy AI users often treat the app like a virtual therapist or partner, sharing intimate details they wouldn’t even tell close friends. That naturally raises questions:

- Does Candy AI store your chats?

- Is your data being used to train AI models?

- Could your private conversations ever leak?

According to its privacy policy, Candy AI uses industry-standard encryption and claims not to sell personal data to third parties. Some users say this makes it more transparent than smaller “sketchy” AI companion platforms.

On Reddit, however, opinions are divided. Some users praised Candy AI for being clearer than most about how conversations are handled, while others grew uneasy after realizing they had shared things they "wouldn't even tell their best friend." A few commenters pointed out that GenAI tools aren't built for therapy, and that using them as a replacement for professional help can be risky if sensitive chats are ever exposed or misused.

Still, experts and users alike recommend caution:

- Don’t share sensitive financial or medical information.

- Use a separate email address.

- Review the app’s privacy updates regularly.

Bottom line:

- Candy AI is not uniquely unsafe, but, as with any AI companion, you should set personal boundaries and treat it as entertainment rather than confidential counseling.

PART 2. Is Candy AI Legit or Shut Down?

In early 2025, reports started circulating that Candy AI’s website and app had gone offline. Some users speculated it was banned by Google due to its NSFW (adult) content, while others pointed to payment processor issues that disrupted subscriptions.

Reddit discussions painted a mixed picture:

- Some users could still access Candy AI on iPhone through non-App Store downloads, though stability was inconsistent.

- Others reported receiving refunds, suggesting financial or operational troubles behind the scenes.

- A few bluntly declared “RIP Candy AI,” implying a partial or permanent shutdown.

Interestingly, one commenter noted that ads for Candy AI’s “AI girlfriends” had shown up on platforms like YouTube — which many saw as evidence of its heavy adult orientation and potential conflict with mainstream app store policies. Another user suggested the service was “nuked by Google for NSFW content,” highlighting how its explicit positioning may have backfired.

This uncertainty underscores a key issue: platform reliability. Even if the service works well today, its long-term availability is not guaranteed. Unlike more established AI companions such as Replika or Character AI, Candy AI’s business model and content choices may put it at higher risk of bans or sudden shutdowns.

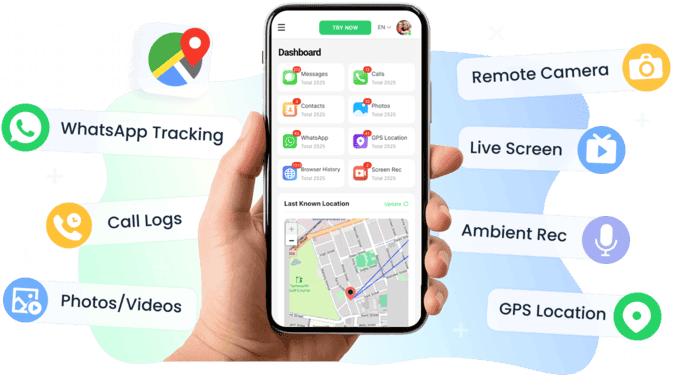

For parents, this raises an extra red flag. If ads for AI girlfriends can appear on mainstream platforms like YouTube, children might accidentally be exposed to inappropriate content. That's why tools like VigilKids matter: VigilKids helps parents monitor what their kids are viewing online, track app usage, and block risky content before it reaches them. Instead of worrying about surprise encounters with NSFW ads or chatbots, parents can stay informed and keep their kids' digital environment safe.

PART 3. Candy AI User Reviews – Positive and Negative

User experiences with Candy AI are highly polarized.

Pros:

- Natural conversations: Feels like chatting with a real person.

- Voice chat quality: Users describe it as clearer than Replika and Character AI.

- Image generation: Surprisingly realistic; some users rate it above dedicated AI art tools.

- Memory retention: The app remembers personal details and storylines across sessions.

- Emotional support: Remote workers and lonely users say it reduced their isolation.

Cons:

- High cost: $13.99/month unless you catch a discount.

- Memory glitches: Characters sometimes forget past details.

- Repetitive replies: Extended chats reveal recurring patterns.

- Customer support delays: Replies often take 24+ hours.

- Image bugs: The AI occasionally produces disturbing visuals (extra limbs, etc.).

One detailed reviewer logged six weeks of testing, reporting 127 conversations, 89 generated images, and a self-reported 70% drop in loneliness.

Takeaway:

- Candy AI can feel immersive if you invest time in setup, but some users find it overpriced and inconsistent.

PART 4. Candy AI and NSFW Content Risks

Candy AI is not just for friendly chats — it leans heavily into adult-oriented content, which is both a draw and a risk.

- NSFW features: Explicit image generation, roleplay scenarios, and premium "private content" videos.

- User experiences: Some praised the creative freedom, while others said the adult features were "not worth the price" compared to free content online.

- Platform risks: Its NSFW orientation is likely why Candy AI faced app store bans and payment restrictions.

For parents, this is especially concerning. A few users noted that inappropriate Candy AI ads (like “AI girlfriends”) have even shown up on platforms such as YouTube — reinforcing the need for parental controls.

PART 5. Best Candy AI Alternatives

If you’re looking for a safer or more stable AI companion, here are the top alternatives users recommend:

- Replika: Best for emotional support and general companionship. Limited NSFW.

- Character AI: Free and fun for roleplay, though less realistic.

- HeraHaven / Talkie AI: More open to adult-oriented interactions.

- MyAnima: Simple, casual AI friend app.

- Crushon: Strong character consistency, customizable memory, multi-character roleplay.

Each has pros and cons, but all are considered more reliable than Candy AI at the moment.

PART 6. Final Verdict – Is Candy AI Safe to Use?

So, is Candy AI safe? The answer depends on what kind of safety you’re asking about:

- Privacy & data: Reasonably protected, but don't overshare.

- Platform stability: Questionable, with shutdown and refund reports.

- User experience: Mixed, immersive for some but glitchy and overpriced for others.

- Content safety: Adult-oriented, not appropriate for younger users.

👉 Who should try Candy AI?

- Remote workers battling loneliness.

- Adults seeking immersive AI conversations and image generation.

- Users who don’t mind experimenting with NSFW roleplay.

👉 Who should avoid it?

- Minors and families.

- Privacy-conscious users unwilling to take risks.

- Anyone looking for a free or purely platonic AI companion.

Final word:

Candy AI is not inherently unsafe, but it comes with privacy, reliability, and NSFW concerns. If you decide to use it, do so mindfully — and always keep alternatives in mind.